CSCI 340 Exam 2

Duration: 75 Minutes

Closed books, notes, and cell phones.

Q1 [16 points]. Short Answer Questions (4 points each)

(a) List 2 conditions under which a multi-threaded process terminates.

Instructor Solution

A multi-threaded process terminates under two conditions:

- One of the threads call the “exit()” system call terminating the process. When the process, which is the container for all threads, terminates, all threads terminate with it.

- All threads inside the process terminate by calling pthread_exit(). When the last thread of a process terminates, the OS terminates the process because there is nothing else to run. Remember, thread is the unit of scheduling, not the process.

(b) Define concurrency and parallelism and briefly explain the difference between the two.

Instructor Solution

Concurrency is the ability of a system to handle multiple tasks or threads, often by interleaving their execution via a pre-emptive scheduling algorithm such as RR so that each task has the impression that they are the only one running in the system and all tasks making progress. Parallelism is the ability of the system to run multiple tasks or thread simultaneously, which requires multiple CPUs or cores, where different cores can run different tasks/threads in parallel.

(c) Consider a set of “n” tasks with known burst times r1, r2, .., rn to be run on a uniprocessor machine. Which scheduling algorithm will result in the maximum throughput, i.e., minimum average turnaround time. Justify your answer.

Instructor Solution

To maximize throughput, i.e., the number of jobs completed per unit of time, we must use Shortest Job First (SJF) scheduling algorithm, which we know minimizes the waiting time. SJF makes sure that processes with short burst times get scheduled and finish quickly leading to higher throughput.

(d) In Round-Robin scheduling, new processes are placed at the end of the queue, rather than at the beginning. Suggest a reason for this.

Instructor Solution

The reason new processes are placed at the end of the ready queue in RR scheduling is to prevent starvation and to give every process a chance to run. Assume that CPU is running P1 and P2 is in the ready queue. If there is a flurry of new incoming processes P3, P4, P5 …, and the OS always places these new processes at the beginning of the ready queue, then these new processes will be scheduled first before P2 has a chance to get the CPU leading to starvation for P2. But by placing the new incoming processes at the end of the ready queue, RR makes sure that all processes get a fair chance to get scheduled and use the CPU.

Q2 [35 points]. CPU Scheduling (7 points each)

Consider the following 5 processes in a system, where lower values indicate higher priority.

| Process | Arrival Time | Burst Time | Priority |

|---|---|---|---|

| P1 | 0 | 10 | 4 |

| P2 | 1 | 5 | 3 |

| P3 | 2 | 3 | 3 |

| P4 | 5 | 4 | 2 |

| P5 | 6 | 2 | 1 |

For each of the 5 scheduling algorithms given below, draw a Gantt chart illustrating the execution of these processes and compute the turnaround time and waiting time for each process.

Note: At any given time, a newly arriving process is put to the back of the ready queue before the executing process is preempted. That is, the preempted process is always the last process in the ready queue. If there is a tie in scheduling between two processes, the process with the smaller id is preferred.

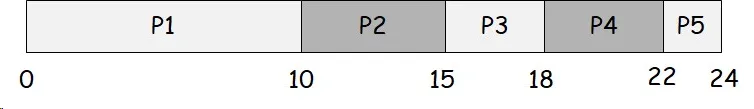

(a) First Come First Served (FCFS)

Instructor Solution

| Process | Turnaround Time | Waiting Time |

|---|---|---|

| 1 | 10 | 0 |

| 2 | 14 | 9 |

| 3 | 16 | 13 |

| 4 | 17 | 13 |

| 5 | 18 | 16 |

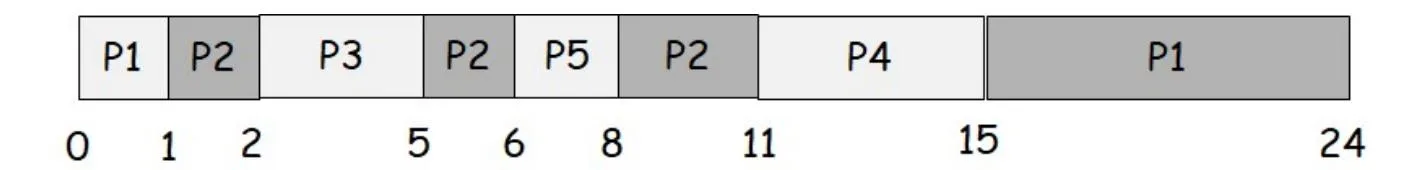

(b) Shortest Remaining Time First (SRTF)

Instructor Solution

| Process | Turnaround Time | Waiting Time |

|---|---|---|

| 1 | 24 | 14 |

| 2 | 10 | 5 |

| 3 | 3 | 0 |

| 4 | 10 | 6 |

| 5 | 2 | 0 |

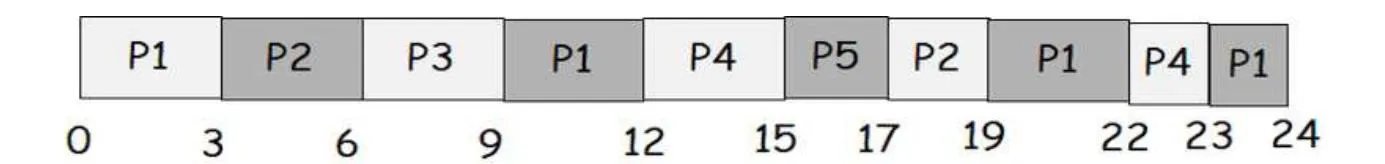

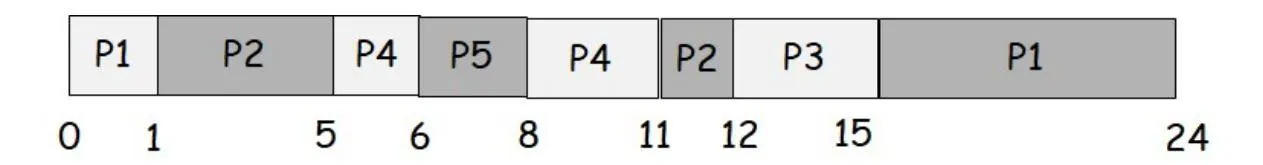

(c) Round Robin Scheduling with a time quantum of 3

Instructor Solution

| Process | Turnaround Time | Waiting Time |

|---|---|---|

| 1 | 24 | 14 |

| 2 | 18 | 13 |

| 3 | 7 | 10 |

| 4 | 18 | 14 |

| 5 | 11 | 9 |

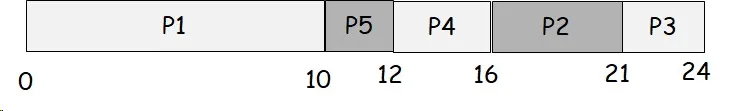

(d) Non-preemptive Priority Scheduling

Instructor Solution

| Process | Turnaround Time | Waiting Time |

|---|---|---|

| 1 | 10 | 0 |

| 2 | 20 | 15 |

| 3 | 22 | 19 |

| 4 | 11 | 7 |

| 5 | 6 | 4 |

(e) Pre-emptive Priority Scheduling

Instructor Solution

| Process | Turnaround Time | Waiting Time |

|---|---|---|

| 1 | 24 | 14 |

| 2 | 11 | 6 |

| 3 | 13 | 10 |

| 4 | 6 | 2 |

| 5 | 2 | 0 |

Q3 [15 points]. Multi-threading

You are given an unsorted array A[0..N-1] and a “key”, and you are asked to find the number of times a key exists in A in parallel as follows: The main thread will create 4 threads, each thread will search N/4 of the elements and terminate. The main thread will then wait for the termination of the worker threads, compute the overall search result, print the number of times the key exists in A on the screen and terminate. If the key does not exist in the array, then print “Key does not exist in the array”. Assume you have 2 functions: (1) CreateThread(F, int arg) creates a thread that starts running at the function F and takes the integer argument arg and returns the id of the created thread, (2) JoinThread(int threadId) is used to wait for the termination of a thread. Assume that array A and key are both global and N is divisible by 4.

#include <stdio.h>

#define N ...

int A[N]; // Un-sorted array to be searched. There can be duplicate values in the array

int key; // Key to be searched

/* Any other auxiliary global variables must be declared here */

int localCount[4];Instructor Solution

Thread function: Executed by each worker thread

void search(int tid) {

int size = N / 4;

int startIdx = tid * size;

int endIdx = startIdx + size;

int count = 0;

for (int i = startIdx; i < endIdx; i++) {

if (A[i] == key)

count++;

}

localCount[tid] = count;

}Main function: Executed by the main thread

int main() {

int tids[4];

for (int i = 0; i < 4; i++)

tids[i] = CreateThread(search, i);

for (int i = 0; i < 4; i++)

JoinThread(tids[i]);

int count = 0;

for (int i = 0; i < 4; i++) {

count += localCount[i];

}

if (count == 0)

printf("Key does not exist in the array");

else

printf("Key exists %d times\n", count);

return 0;

}Q4 [18 points]. Process Synchronization

Consider the problem of building the molecule HO2C3. Assume that there is a stream of Hydrogen, Oxygen and Carbon threads each executing their corresponding thread functions given below. Your goal is to use mutexes and semaphores to synchronize these threads so that one hydrogen, 2 oxygen and 3 carbon threads are paired together to generate one molecule. Then the cycle starts all over again to build the next molecule. Use printf("H"), printf("O") or printf("C") to output one atom. Also, use lock(mutex)/unlock(mutex) to lock & unlock a mutex, and sem_wait(sem)/sem_post(sem) to decrement & increment a semaphore. Make sure that for each molecule, the atoms are output in the following order: HOOCCC.

Initialization Code

mutex_t mutex;

int numO = 0;

int numC = 0;

sem_t semH = 1;

sem_t semO = 0;

sem_t semC = 0;Instructor Solution

void Hydrogen() {

sem_wait(semH);

printf("H");

sem_post(semO);

sem_post(semO);

}void Oxygen() {

sem_wait(semO);

printf("O");

lock(mutex);

if (++numO == 2) {

numO = 0;

sem_post(semC);

sem_post(semC);

sem_post(semC);

}

unlock(mutex);

}void Carbon() {

sem_wait(semC);

printf("C");

lock(mutex);

if (++numC == 3) {

numC = 0;

sem_post(semH);

}

unlock(mutex);

}Q5 [16 points] Short Answer Questions (4 points each)

(a) What’s a Critical Section? What is mutual exclusion? Write a simple code to show how you would achieve mutual exclusion in a critical section using a mutex lock.

Instructor Solution

A critical section is the part of the code where a shared resource (a variable, a buffer, a data structure, etc.) is being accessed and modified. Mutual exclusion is to make sure that in a multi-threaded code, there is only one thread inside the critical section. To achieve mutual exclusion in a critical section, we use a mutex lock as follows:

pthread_mutex_t mutex;

pthread_mutex_lock(&mutex); // Grab the lock

// <Critical Section>

pthread_mutex_unlock(&mutex); // Release the lock(b) Briefly explain why spinlocks are not appropriate for single processor systems yet are often used in multiprocessor systems.

Instructor Solution

A spinlock is one where the thread that is trying to enter the critical section must spin in a while loop trying to grab the lock, which is owned by another thread that is already inside the critical section. In a single CPU system, the thread spinning on the lock will simply waste CPU cycles until the scheduler takes the CPU away from it and schedules the thread that is inside the critical section, which then makes progress and leaves the critical section so that the spinning thread can get re-scheduled and finally enter the critical section. In a multi CPU system, spinlocks are used because they may prevent a context switch as follows: Assume that the thread inside the critical section is about to leave the critical section when the second thread arrives and starts spinning on the lock. Because we have two CPUs, one thread will be running in CPU 1 and the other will be running in CPU2. The thread inside the critical section running say in CPU 1 leaves the critical section very quickly and releases the lock. At that point, the second thread running in CPU 2 and waiting to grab the lock can immediately enter the critical section. Thus, we saved one context switch.

(c) Compare and contrast a mutex lock to a binary semaphore in terms of purpose and ownership.

Instructor Solution

The purpose of a mutex lock is to achieve mutual exclusion in a critical section. The thread that grabs the mutex lock owns this lock and only the owner can release it by unlocking the mutex lock. This also allows recursive locking, where the owner of the lock can potentially lock the mutex multiple times and unlock it the same number of times to release it. The purpose of a binary semaphore is signaling between different threads and there is no ownership of a binary semaphore. It is manipulated by two operations (wait/post, P/V), which are used to atomically decrement the value of the semaphore or to atomically increment the value of the semaphore. Semaphores are used for thread coordination and notification but should NOT be used to achieve mutual exclusion in a critical section.

(d) Briefly explain what priority inversion is and how bounded priority inversion occurs.

Instructor Solution

Consider two threads T1 and T2 with T1 having higher priority than T2. Assume that T2 runs first and enters the critical section grabbing the lock. While T2 is running inside the critical section, T1 arrives (is added to the ready queue). Because T1 has higher priority and the scheduler employs a pre-emptive priority scheduling algorithm, the scheduler preempts T2 and schedules T1. Now T1 tries to enter the critical section but gets blocked on the lock protecting the critical section because T2 is inside the CS. So, T2 gets scheduled again, finishes its job inside the CS and leaves the CS releasing the lock. After T2 releases the lock, T1 is moved back to the ready queue and gets scheduled and enters the CS. As you can see, although T2 has a lower priority than T1, it continues running because it holds the lock and will continue using the CPU until it reaches the end of the CS and releases the lock. This is called “bounded priority inversion” problem, and this is caused by locking. To prevent this problem, you need to avoid locks and achieve mutual exclusion using lock-free mechanisms, which are quite difficult to implement.